Last month, Serena Lee was just another bubble tea-addicted New Jersey college student who loved to watch The Office while searching for her future beau on Tinder.

Except she wasn't. Lee was actually a male Princeton student named Sean who "got bored while cramming for finals and decided to catfish people with the new Snapchat filter."

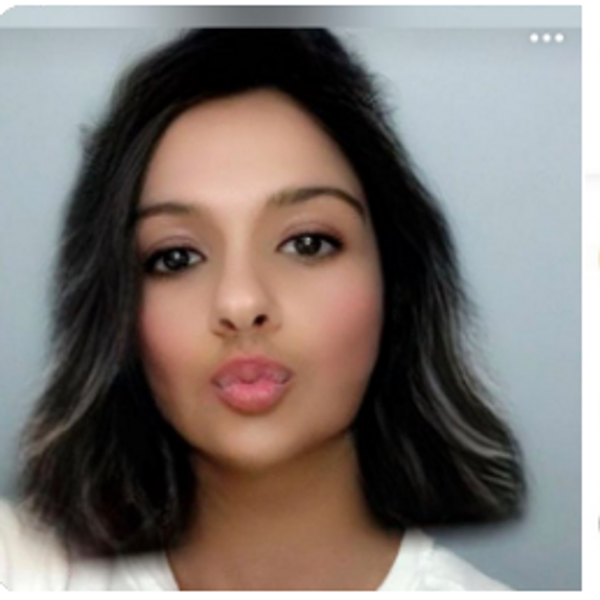

Yep, that one. The one that makes men look like beautiful women and women look like men you'd cross the street to avoid. The one that's so popular that a lot of people who left Snapchat are re-downloading it just to see what the hype is all about. Snapchat's "gender-swapping" filter, which came out in early May of this year, quickly became a social media hit. And Lee wasn't the only one who immediately used it to pose on Tinder — there's a whole trend of catfishing-for-jokes, which consists almost entirely of men pretending to be women.

While on the surface the filter may seem like harmless fun, its implications, both conceptual and practical, are deeply troubling. Besides spawning this real-life catfishing phenomenon, which tells us nothing new about men online, Snapchat's use of face alteration technology along the axis of gender reinforces stereotypical ideas of male and female appearance, and raises questions about how we should use such identity-altering (or identity-concealing) technology in the first place.

Face alteration technology like Snapchat's gender-swapping filter is based on generative adversarial networks (GANs), a relatively recent machine learning technique that can perform image-to-image translation. Facial recognition, by contrast, doesn't require translating one image into another, and was possible before GANs were developed. Jonathan Zong, a PhD student in human-computer interaction at MIT whose work often concerns ethics in institutional and technological research, says that most everyday users probably aren't familiar with the details of the technology behind Snapchat's filter. One crucial element is the input the filter depends on: the faces that were collected to create it in the first place.

"People often think that data is just out there," says Zong. "But that's not true. Someone had to collect it."

Exactly which data set was used to create the filter isn't currently public; researchers and techies have been trying to figure it out on their own, but the engineers at Snapchat have been pretty tight-lipped about it.

"Data comes from people's bodies," Zong continues. "And people's identities are complex, multifaceted, and change over time. They don't conform to scales — as opposed to data, which is inherently numerical."

Lee says that his motivations for creating a new Tinder alias and keeping up a rigorous swipe-every-three-guys routine for four days were mostly fun and procrastination, but also that he'd heard horror stories from his female friends about what it's like to be a woman on dating apps today. "I knew how gross guys could be, and I wanted to experience it myself…I wanted to call out the men who were like that. Actually hearing it for myself, though — it was disgusting," he said.

Men understand how other men behave on online dating platforms, and yet many of them still want to experience it for themselves? For all the men out there saying, "I feel for you women everywhere if this is what your inboxes actually look like," or "Used the Snapchat filter on Tinder for 30 minutes, and in conclusion I hate men," this is voyeurism at its best and strangest. The hope is that men who now know what it's like to be on the receiving end of such behavior will stop other men from acting that way, but let's be honest: that's highly unlikely.

Zong points out that the catfishing phenomenon also parallels what members of trans communities have been saying about the filter — that it allows those with the most privilege both in real life and online to perform other identities and then retreat back to safety, while women and other marginalized people continue to face harassment online and off.

There's also something even darker that Snapchat's gender-swapping filter brings to mind: deepfakes, videos in which figures and faces have been manipulated by machine learning, and which, somewhat unsurprisingly, were adopted for pornography as soon as they were created.

Earlier this year, Zong presented on deepfakes, specifically on what artificial bodies in pornography might mean for the future, at the Theorizing the Web conference. At first glance, artificial bodies in pornography might seem like a good thing: replacing real people with synthetic images could reduce the potential for real harm in, say, trafficking or exploitation. But Zong disagrees with this view, and rightly so. "It's not really solving the problem — it's just a subtler version of the problem, one that gets into hairy issues," he says. "These synthetic images were made from a number of people's bodies and faces. In actuality, anything that's AI is not divisible from human labor. Nothing is completely synthetic."

AI-assisted porn and Snapchat's gender-swapping filter aren't the same thing (even if Snapchat's founders did originally create the app for sexting purposes). But the technology and the social problems underlying them are similar, and in both cases, nothing is completely synthetic. Chief among these problems is the distortion of consent, whether for faces and bodies that are actively and visibly co-opted for pornographic purposes, or for faces and bodies that are just as actively but less visibly stored, taken, and used to create a filter for someone else's face. With Snapchat, we're also dealing with unwanted performances of gender, the flaunting of gendered privilege, and the further entrenchment of stereotypical gendered appearances.

But perhaps most important is what's happening inside us. If airport facial recognition technology makes us nervous, or if AI-assisted porn makes us question our standards of consent, why don't the mobile social media apps we open and close so easily? We're getting used to having our faces mapped, collected, changed, and owned. And we're getting somewhere close to okay with it, if we're not already there.

Our response to that should be what one user of the gender-swap filter aptly said on Twitter after learning what kind of messages women can receive on Tinder: "Holy fuck, I made a mistake."

Photo via Twitter